Procedural Multiscale Geometry Modeling using Implicit Surfaces

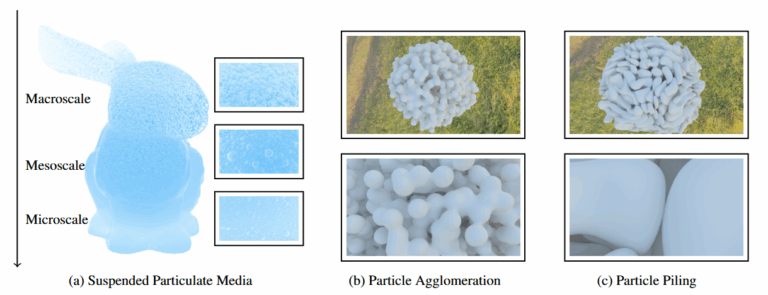

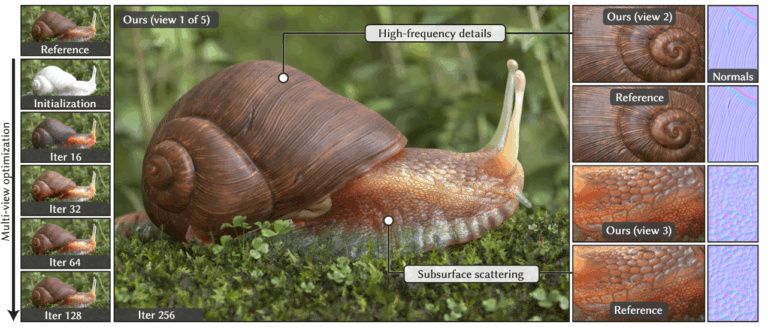

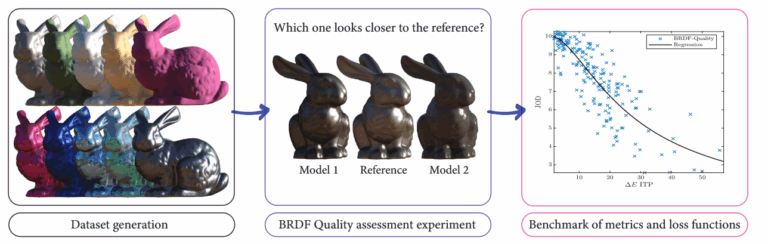

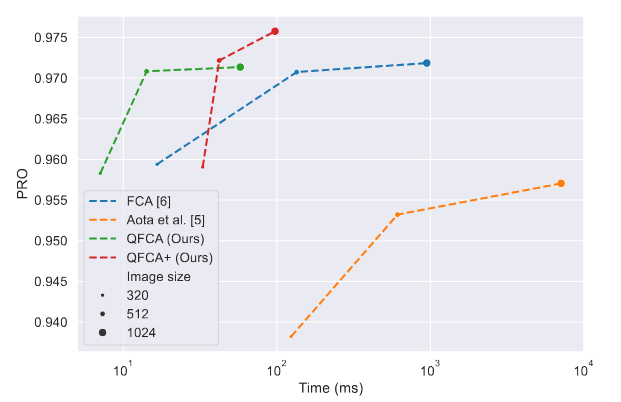

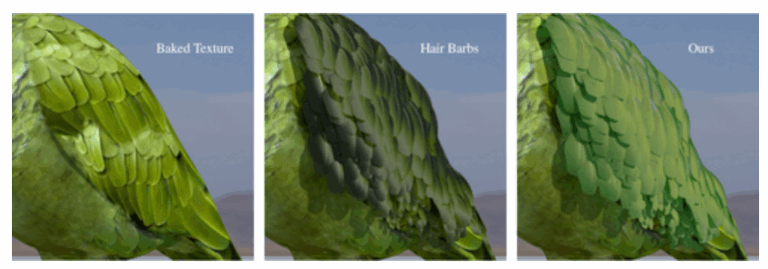

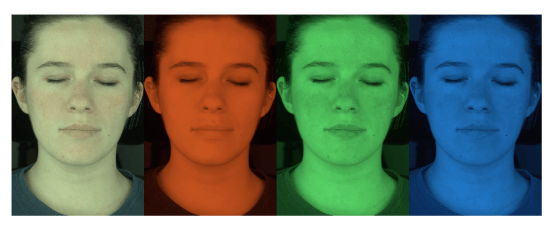

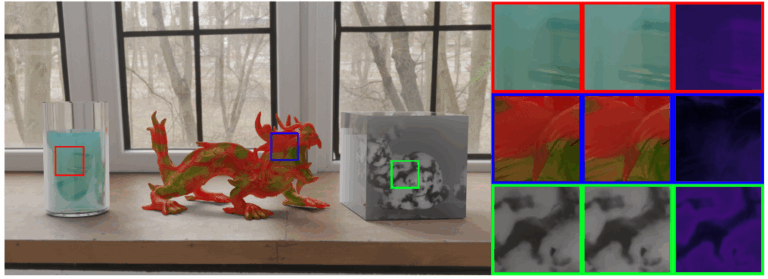

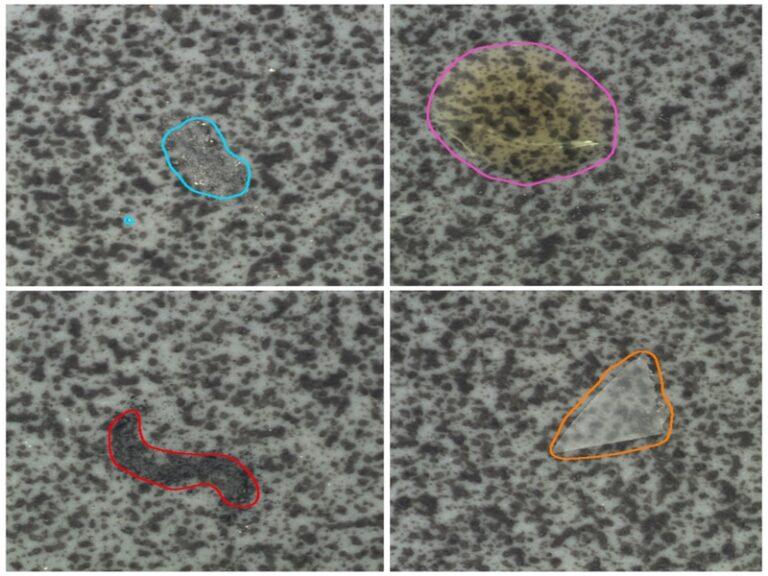

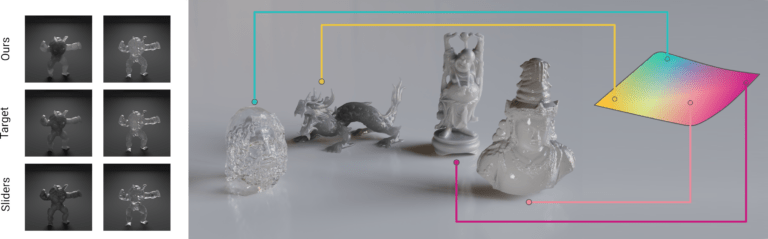

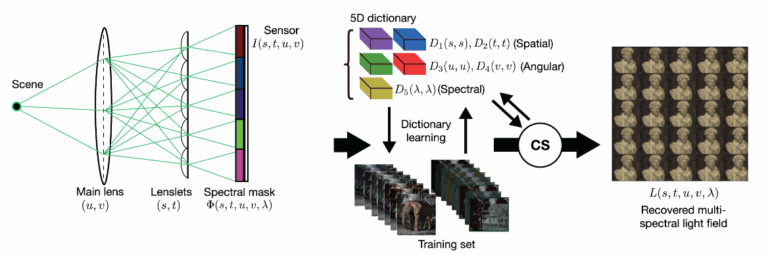

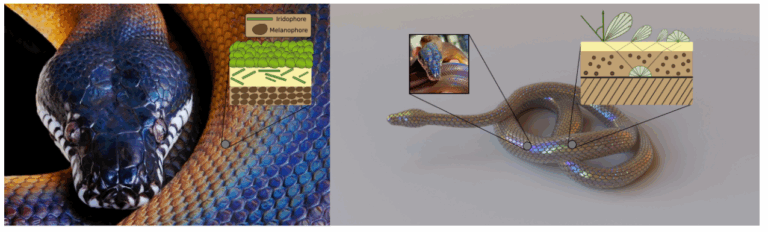

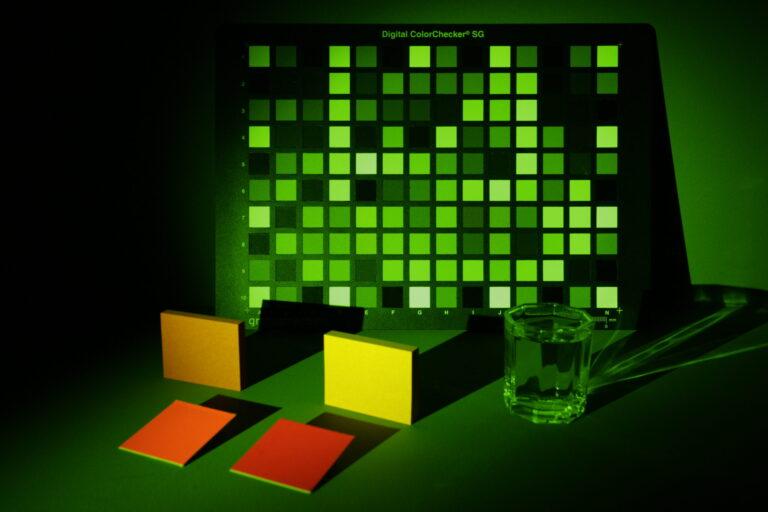

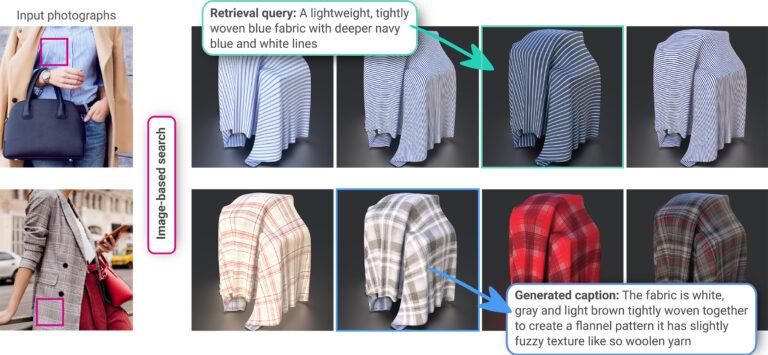

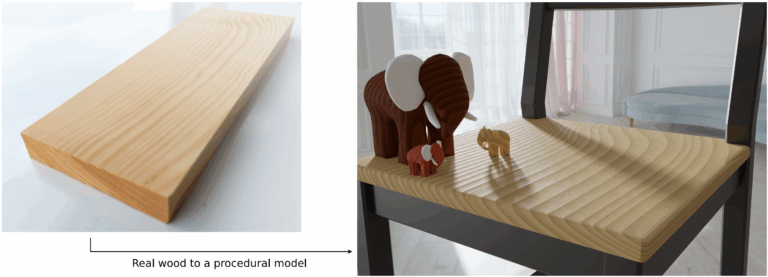

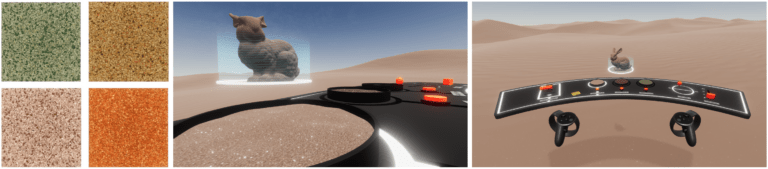

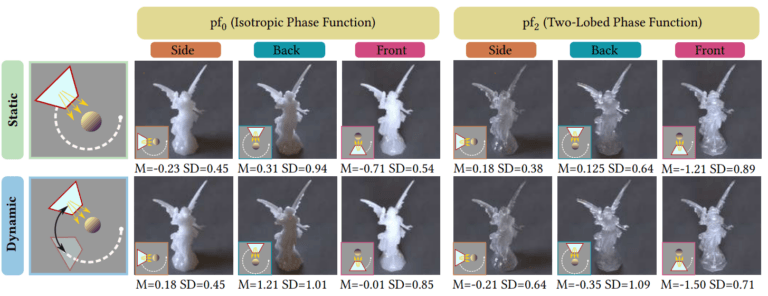

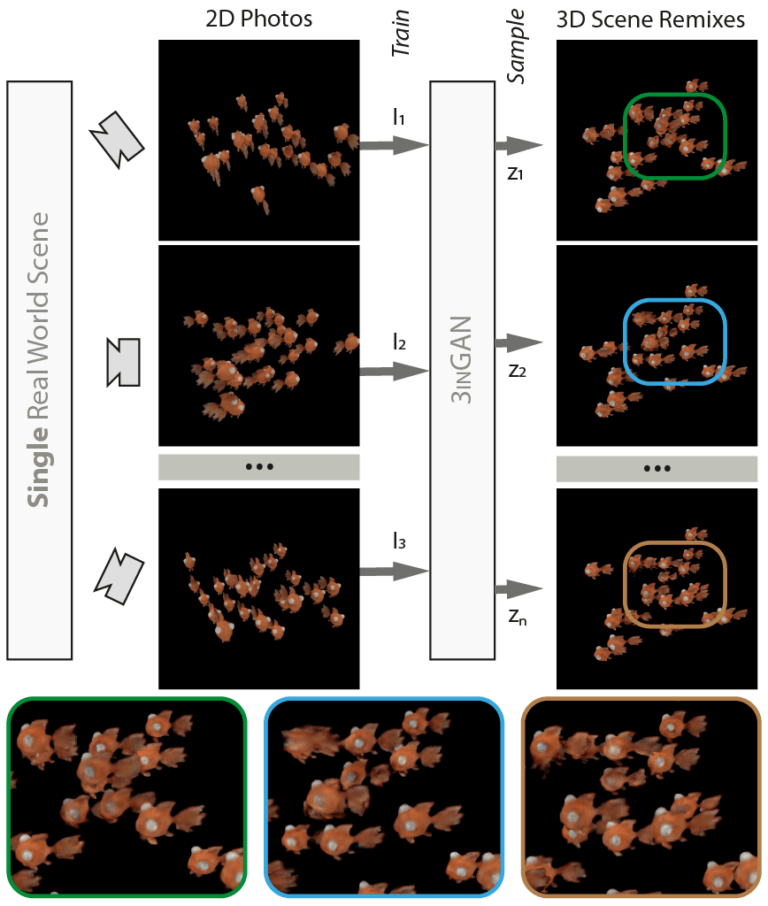

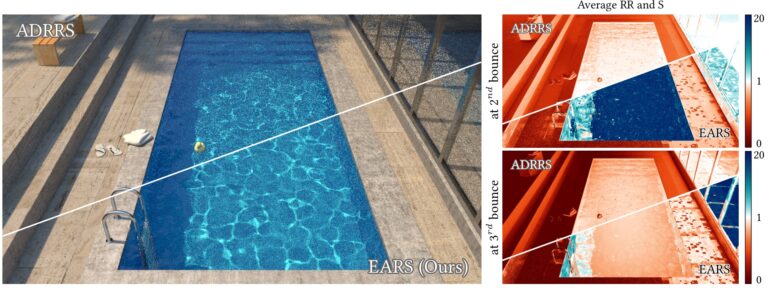

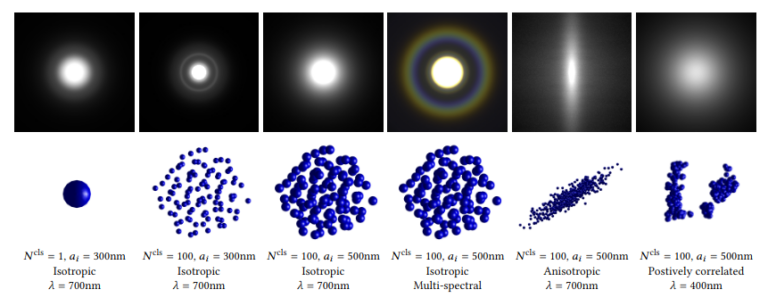

Materials exhibit geometric structures at scales ranging from mesoscopic to microscopic, and these structures influence macroscale properties such as appearance, mechanical strength, and thermal behavior. Capturing and modeling these multiscale structures is challenging but essential for computer graphics, engineering, and materials science. We present a framework inspired by hypertexture methods that uses implicit surfaces and sphere tracing to synthesize multiscale structures on the fly, without precomputation. The framework models volumetric materials with particulate, fibrous, porous, and laminar structures, and allows control over their size, shape, density, distribution, and orientation. We enhance structural diversity by superimposing implicit periodic functions while improving computational efficiency. The framework also supports spatially varying particulate media, particle agglomeration, and piling on convex and concave mesoscale structures, such as rock formations, without explicit simulation. We demonstrate its potential in the appearance modeling of volumetric materials and investigate how spatially varying properties affect the perceived macroscale appearance. As a proof of concept, we show that microstructures created by our framework can be reconstructed from image and distance values defined by implicit surfaces, using both first-order and gradient-free optimization methods.
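To make the core idea concrete, the following is a minimal sketch of sphere tracing against a periodic implicit surface, assuming a simple cubic lattice of identical spheres as the particulate medium. It is not the framework's implementation: the function names `sdf_periodic_spheres` and `sphere_trace`, the parameters `cell`, `radius`, `eps`, and `t_max`, and the choice of lattice are all hypothetical and chosen only for illustration.

```python
import math

def sdf_periodic_spheres(p, cell=1.0, radius=0.25):
    """Signed distance to an infinite cubic lattice of spheres (assumed layout)."""
    # Fold the point into the cell centered on the nearest lattice point.
    # This domain repetition evaluates infinitely many spheres at constant cost.
    q = [x - cell * round(x / cell) for x in p]
    return math.sqrt(sum(c * c for c in q)) - radius

def sphere_trace(origin, direction, sdf, max_steps=128, eps=1e-4, t_max=50.0):
    """March along a unit-length ray; return the hit distance or None on a miss."""
    t = 0.0
    for _ in range(max_steps):
        p = [o + t * d for o, d in zip(origin, direction)]
        dist = sdf(p)
        if dist < eps:
            return t  # close enough to the surface to count as a hit
        t += dist     # the SDF value is a safe step: no surface lies closer
        if t > t_max:
            break
    return None

# A ray aimed at the sphere centered on the origin.
hit = sphere_trace([0.0, 0.0, -0.5], [0.0, 0.0, 1.0], sdf_periodic_spheres)
# A ray running along the gap between lattice columns never reaches a sphere.
miss = sphere_trace([0.5, 0.5, 0.0], [0.0, 0.0, 1.0], sdf_periodic_spheres)
```

Because the geometry is evaluated only where a ray samples it, nothing is stored or precomputed. In this sketch, varying `cell` or `radius` as a function of position would give a spatially varying medium, although the distance value is then no longer exact and the step size would need to be reduced accordingly.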