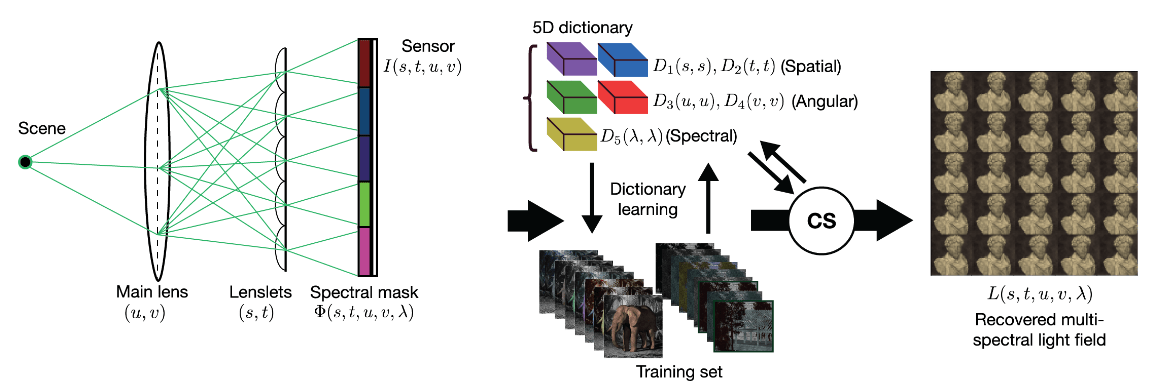

This paper considers a compressive multi-spectral light field camera model that uses a one-hot spectral-coded mask and a microlens array to capture spatial, angular, and spectral information with a single monochrome sensor. We propose a model that employs compressed sensing techniques to reconstruct the complete multi-spectral light field from undersampled measurements. Unlike previous work, in which the light field is vectorized into a 1D signal, our method employs a 5D basis and a novel 5D measurement model, thus matching the intrinsic dimensionality of multi-spectral light fields. We mathematically and empirically show the equivalence of the 5D and 1D sensing models and, most importantly, that the 5D framework achieves orders-of-magnitude faster reconstruction while requiring a small fraction of the memory. Moreover, our new multidimensional sensing model opens new research directions for designing efficient visual data acquisition algorithms and hardware.
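To illustrate why a multidimensional sensing model can be equivalent to a vectorized one, the minimal sketch below assumes a *separable* forward model, i.e. one small operator per dimension (two spatial, two angular, one spectral). This is a simplifying assumption for illustration and not the paper's one-hot mask model. Under it, applying the per-mode operators to the 5D tensor yields the same measurements as a single 1D matrix (the Kronecker product of the factors) acting on the flattened signal, while only the small factors need to be stored. All function names and sizes are hypothetical.

```python
import numpy as np

def mode_n_product(tensor, matrix, mode):
    # Multiply `tensor` along axis `mode` by `matrix` (shape: out_size x in_size).
    t = np.moveaxis(tensor, mode, 0)
    rest = t.shape[1:]
    out = matrix @ t.reshape(t.shape[0], -1)
    return np.moveaxis(out.reshape((matrix.shape[0],) + rest), 0, mode)

def sense_nd(x, operators):
    # Multidimensional model: one small operator per dimension, applied mode by mode.
    y = x
    for mode, a in enumerate(operators):
        y = mode_n_product(y, a, mode)
    return y

def sense_1d(x, operators):
    # Vectorized model: one large matrix acting on the flattened signal. With NumPy's
    # row-major flattening, the Kronecker factors appear in the same order as the axes.
    big = operators[0]
    for a in operators[1:]:
        big = np.kron(big, a)
    return big @ x.reshape(-1), big

rng = np.random.default_rng(0)
dims = (4, 4, 3, 3, 5)  # hypothetical sizes: 2 spatial, 2 angular, 1 spectral
ops = [rng.standard_normal((m, n)) for m, n in zip((2, 2, 2, 2, 3), dims)]
x = rng.standard_normal(dims)
y_nd = sense_nd(x, ops)
y_1d, big = sense_1d(x, ops)
print(np.allclose(y_nd.reshape(-1), y_1d))   # True
print(big.size, sum(a.size for a in ops))    # 34560 43
```

Even at these toy sizes, the explicit 1D matrix holds 34,560 entries against 43 for the per-mode factors, which hints at where the memory savings of a multidimensional formulation come from.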

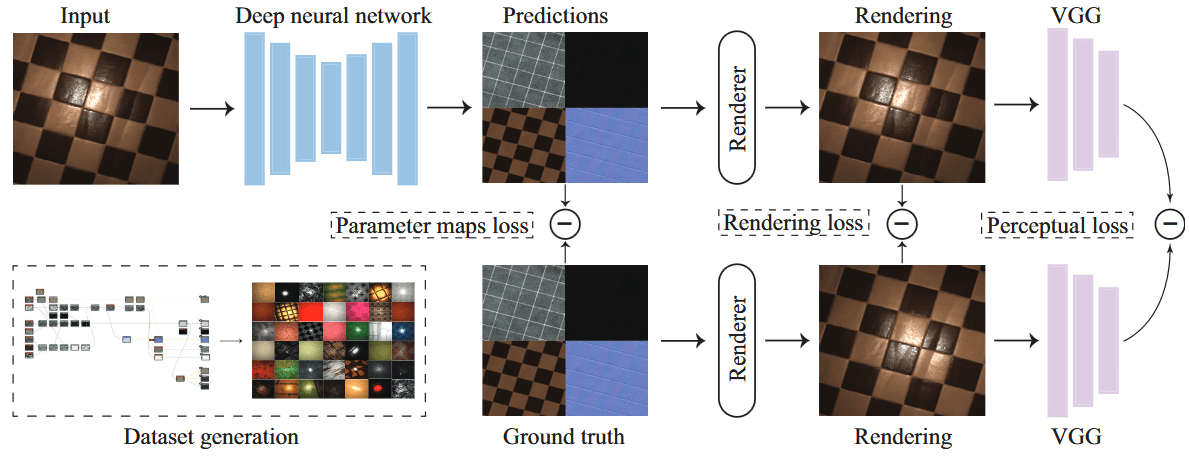

Hand in hand with the rapid development of machine learning, deep learning, and generative AI algorithms and architectures, the graphics community has seen a remarkable evolution of novel techniques for material and appearance capture. In contrast to traditional techniques, these machine-learning-driven methods typically rely on only a single or very few input images, while still enabling the recovery of detailed, high-quality bidirectional reflectance distribution functions (BRDFs) and of the corresponding spatially varying material properties, known as Spatially Varying Bidirectional Reflectance Distribution Functions (SVBRDFs). Learning-based approaches for appearance capture will play a key role in the development of new technologies with a significant impact on virtually all domains of graphics. Therefore, to facilitate future research, this State-of-the-Art Report (STAR) presents an in-depth overview of machine-learning-driven material capture in general, with a particular focus on SVBRDF acquisition due to its importance in accurately modelling the complex light interaction properties of real-world materials. The overview includes a categorization of current methods, a summary of each technique, and an evaluation of their functionalities, their complexity in terms of acquisition requirements, computational aspects, and usability constraints. The STAR concludes by looking forward and summarizing open challenges in research and development toward predictive and general appearance capture. A complete list of the methods and papers reviewed in this survey is available at computergraphics.on.liu.se/star_svbrdf_dl/.
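A common way for learning-based capture pipelines to represent an SVBRDF is as a set of per-texel maps (diffuse albedo, specular albedo, roughness, normal) evaluated with an analytic microfacet model. The sketch below assumes a Cook-Torrance model with a GGX normal distribution, Schlick's Fresnel approximation and a Smith-Schlick shadowing term; individual methods covered by the survey may use different models or parameterizations, and the function names are hypothetical.

```python
import numpy as np

def normalize(v):
    return v / np.linalg.norm(v, axis=-1, keepdims=True)

def ggx_svbrdf(diffuse, specular, roughness, normal, wi, wo):
    # Per-texel maps: diffuse, specular, normal, wi, wo have shape (H, W, 3); roughness (H, W).
    n, wi, wo = normalize(normal), normalize(wi), normalize(wo)
    h = normalize(wi + wo)                                  # half vector
    n_l = np.clip(np.sum(n * wi, axis=-1), 1e-6, 1.0)
    n_v = np.clip(np.sum(n * wo, axis=-1), 1e-6, 1.0)
    n_h = np.clip(np.sum(n * h, axis=-1), 0.0, 1.0)
    v_h = np.clip(np.sum(wo * h, axis=-1), 0.0, 1.0)
    alpha = np.clip(roughness, 1e-3, 1.0) ** 2              # perceptual roughness -> alpha
    a2 = alpha ** 2
    d = a2 / (np.pi * (n_h ** 2 * (a2 - 1.0) + 1.0) ** 2)   # GGX normal distribution
    f = specular + (1.0 - specular) * ((1.0 - v_h) ** 5)[..., None]  # Schlick Fresnel
    k = alpha / 2.0                                          # one common Smith-Schlick choice
    g = (n_l / (n_l * (1.0 - k) + k)) * (n_v / (n_v * (1.0 - k) + k))
    spec = (d * g / (4.0 * n_l * n_v))[..., None] * f
    return diffuse / np.pi + spec                            # BRDF value per texel, (H, W, 3)

H, W = 2, 3
normals = np.zeros((H, W, 3))
normals[..., 2] = 1.0
light = np.broadcast_to(normalize(np.array([0.3, 0.1, 1.0])), (H, W, 3))
view = np.broadcast_to(normalize(np.array([-0.2, 0.4, 1.0])), (H, W, 3))
f = ggx_svbrdf(np.full((H, W, 3), 0.5), np.full((H, W, 3), 0.04),
               np.full((H, W), 0.4), normals, light, view)
print(f.shape)  # (2, 3, 3)
```

A capture network in this setting predicts the four maps, and a differentiable evaluation like this one lets a rendering loss compare re-rendered and observed pixels.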

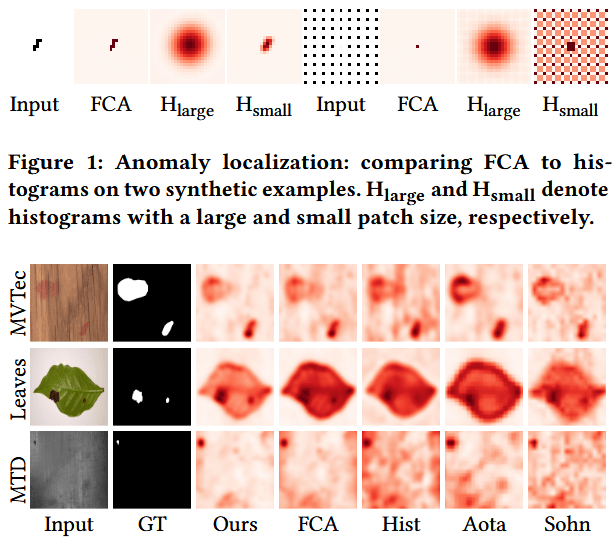

The problem of detecting and localizing defects in images has been tackled with various approaches, including what are now called traditional computer vision techniques as well as machine learning. Notably, most of these efforts have been directed toward the normality-supervised setting of this problem: the algorithms assume the availability of a curated set of normal images known not to contain any anomalies. These anomaly-free images constitute reference data used to detect anomalies in a one-class classification setting. While such data is easier to acquire than anomaly-annotated images, in-domain normal data can still be costly or difficult to obtain for certain applications. We address anomaly detection and localization under a training-set-free paradigm that does not require any anomaly-free reference data. Concretely, we introduce a truly zero-shot method that can localize anomalies in a single image of a previously unobserved texture class. We then develop a mechanism to leverage additional test images, which may themselves contain anomalies. Furthermore, we extend our analysis to categorize the anomalies in a given population through clustering. Importantly, we focus on textures and texture-like images, since our method targets structural defects rather than logical anomalies. This aligns with the proposed setting, which avoids the supervisory signal generally needed to detect logical and semantic anomalies. This poster summarizes our recent line of research on the localization and classification of anomalies in real-world texture images.
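One way a single-image, training-free detector can exploit texture self-similarity is sketched below. It is a generic baseline, not the authors' method: each patch is scored by its mean distance to its k nearest other patches in the *same* image, so repeated normal structure scores low and rare structural defects score high. Raw pixel patches stand in for the learned features a practical system would use; all names and parameters are hypothetical.

```python
import numpy as np

def patch_features(img, patch=8, stride=4):
    # Hypothetical feature: raw pixel values of each patch (a learned descriptor in practice).
    feats, coords = [], []
    for y in range(0, img.shape[0] - patch + 1, stride):
        for x in range(0, img.shape[1] - patch + 1, stride):
            feats.append(img[y:y + patch, x:x + patch].ravel())
            coords.append((y, x))
    return np.array(feats), coords

def zero_shot_scores(img, patch=8, stride=4, k=3):
    feats, coords = patch_features(img, patch, stride)
    # Pairwise distances between all patches of the same image: no reference data needed.
    d = np.linalg.norm(feats[:, None, :] - feats[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)  # a patch must not match itself
    # Textures are self-similar: normal patches have close matches elsewhere in the image,
    # whereas a defect is rare, so its k nearest neighbours are far away.
    knn = np.sort(d, axis=1)[:, :k]
    return knn.mean(axis=1), coords

# Usage: a synthetic checkerboard texture with a small grey defect.
yy, xx = np.mgrid[0:64, 0:64]
texture = ((yy // 4 + xx // 4) % 2).astype(float)
texture[30:34, 30:34] = 0.5
scores, coords = zero_shot_scores(texture)
print(coords[int(np.argmax(scores))])  # top-left corner of the most anomalous patch
```

Scores can be splatted back onto the patch footprints to obtain a pixel-level anomaly map.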

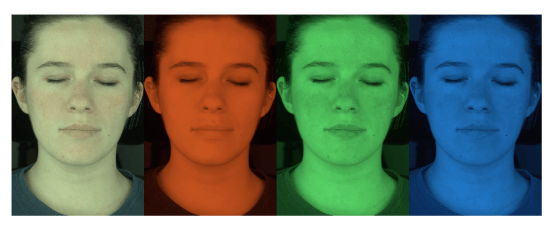

Biophysical skin appearance modeling has previously focused on spectral absorption and scattering due to chromophores in the various skin layers. In this work, we extend recent practical skin appearance measurement methods employing RGB illumination to provide a novel estimate of skin fluorescence, as well as direct measurements of two parameters related to blood distribution in skin: blood volume fraction and blood oxygenation. The proposed method acquires RGB facial skin reflectance responses under RGB illumination produced by regular desktop LCD screens. Unlike previous works that employed hyperspectral imaging for this purpose, we demonstrate successful isolation of elastin-related fluorescence, as well as of blood distributions in capillaries and veins, using our practical RGB imaging procedure.

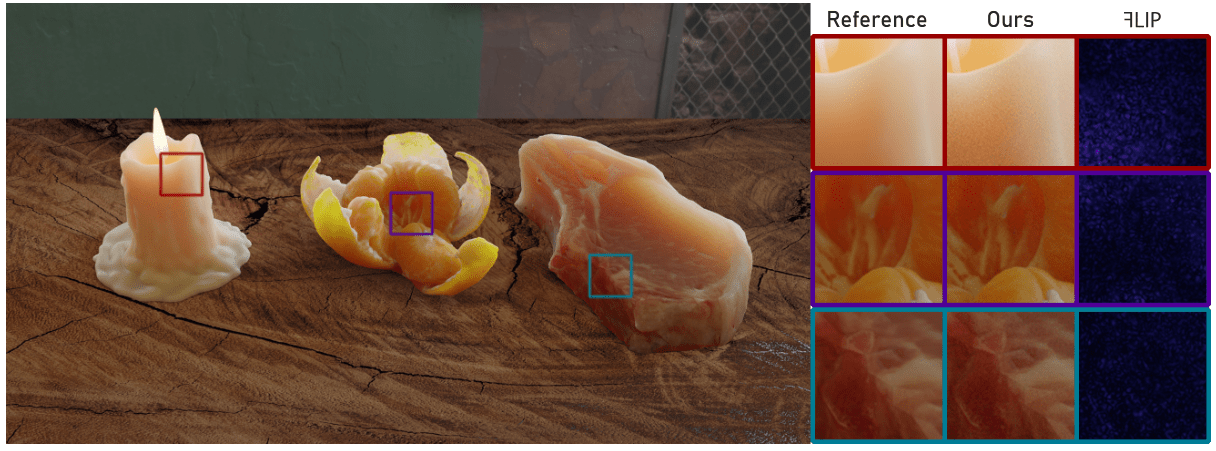

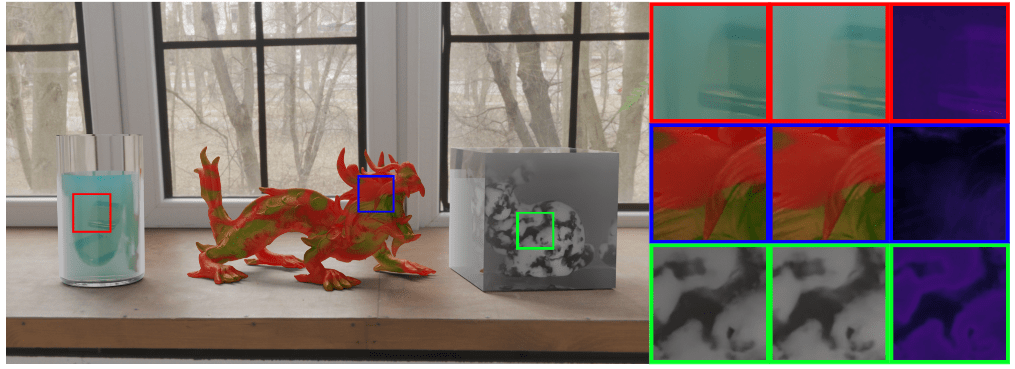

We present a method for capturing the BSSRDF (bidirectional scattering-surface reflectance distribution function) of arbitrary geometry with a neural network. We demonstrate how a compact neural network can represent the full 8-dimensional light transport within an object, including heterogeneous scattering. We develop an efficient rendering method using importance sampling that can render complex translucent objects under arbitrary lighting. Our method can also leverage the common planar half-space assumption, which allows a single BSSRDF model to be used across a variety of geometries. Our results demonstrate that we can render heterogeneous translucent objects under arbitrary lighting and obtain results that match references rendered using volumetric path tracing.
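Importance sampling for BSSRDF rendering commonly places probe points on the tangent plane around the exit point, with a radial density that roughly follows the falloff of subsurface transport. The sketch below assumes an exponential radial profile p(r) = σ e^(-σr) with a uniform angle (a simple common choice, not necessarily the one used by the neural method above) and converts it to a density per unit area, so each sample can be weighted by f/pdf. `sample_disk_exponential` is a hypothetical helper.

```python
import numpy as np

def sample_disk_exponential(sigma, n, rng):
    # Radial pdf p(r) = sigma * exp(-sigma * r) (assumed profile), angle uniform in [0, 2*pi).
    u1, u2 = rng.random(n), rng.random(n)
    r = np.maximum(-np.log1p(-u1) / sigma, 1e-12)  # inverse CDF of the exponential
    phi = 2.0 * np.pi * u2
    offsets = np.stack([r * np.cos(phi), r * np.sin(phi)], axis=-1)
    # Convert the (r, phi) density into a density per unit area on the tangent plane.
    pdf_area = sigma * np.exp(-sigma * r) / (2.0 * np.pi * r)
    return offsets, pdf_area

# Usage: estimate the integral of a toy radial profile exp(-|x|^2) over the plane (exact value: pi).
rng = np.random.default_rng(0)
offsets, pdf = sample_disk_exponential(1.0, 1_000_000, rng)
profile = np.exp(-np.sum(offsets ** 2, axis=-1))
estimate = np.mean(profile / pdf)
print(estimate)
```

The closer the sampling density tracks the actual scattering profile, the lower the variance of the f/pdf weights.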

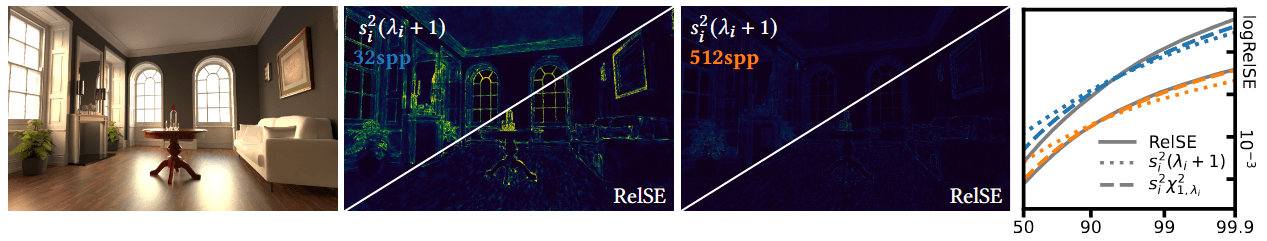

We present a practical global error estimation technique for Monte Carlo ray tracing combined with deep-learning-based denoising. Our method uses aggregated estimates of bias and variance to determine the distribution of the pixels' squared error. Unlike for the unbiased estimates of classical Monte Carlo ray tracing, this distribution follows, under reasonable assumptions, a noncentral chi-squared distribution. Based on this, we develop a stopping criterion for denoised Monte Carlo image synthesis that terminates rendering once a user-specified error threshold has been reached. Our results demonstrate that our error estimate and stopping criterion work well on a variety of scenes and that a given error threshold can be achieved without the user specifying the number of samples needed.
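The connection between bias, variance and the noncentral chi-squared distribution can be made concrete. Assuming a pixel's error is e = b + σZ with standard Gaussian Z, e²/σ² is noncentral chi-squared with one degree of freedom and noncentrality λ = b²/σ², whose mean is 1 + λ; the expected squared error is therefore σ² + b². The sketch uses that mean in a hypothetical stopping loop that doubles the sample count until the image-averaged expected squared error falls below a threshold. Holding the bias fixed while the variance decays as 1/spp is a simplifying assumption, since a denoiser's bias also changes with the sample count; the function names are illustrative.

```python
import numpy as np

def expected_sq_error(bias, variance):
    # e = bias + sqrt(variance) * Z  =>  e^2 / variance ~ noncentral chi-squared(1, bias^2 / variance),
    # whose mean is 1 + bias^2 / variance, so E[e^2] = variance + bias^2.
    return variance + bias ** 2

def samples_until_threshold(bias, var_per_sample, threshold, max_spp=1 << 16):
    # Hypothetical stopping loop: per-pixel variance shrinks as var_per_sample / spp,
    # bias is held fixed (simplification), spp doubles each round.
    spp = 1
    while spp <= max_spp:
        mse = np.mean(expected_sq_error(bias, var_per_sample / spp))
        if mse <= threshold:
            return spp
        spp *= 2
    return None  # threshold unreachable within the budget (e.g. bias^2 alone exceeds it)

print(samples_until_threshold(np.zeros(4), np.ones(4), 0.1))  # 16
```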

Monte Carlo rendering of translucent objects with heterogeneous scattering properties is often expensive in terms of both memory and computation. If the scattering properties are described by a 3D texture, memory consumption is high. If we path trace under a high-dynamic-range lighting environment, the computational cost of rendering can easily become significant. We propose a compact and efficient neural method for representing and rendering the appearance of heterogeneous translucent objects. Instead of assuming only surface variation of optical properties, our method represents the appearance of a full object, taking its geometry and volumetric heterogeneities into account. This is similar to a neural radiance field, but our representation works for an arbitrary distant lighting environment. In a sense, we present a version of neural precomputed radiance transfer that captures the relighting of heterogeneous translucent objects. We use a multi-layer perceptron (MLP) with skip connections to represent the appearance of an object as a function of spatial position, direction of observation, and direction of incidence. The latter is treated as a directional light incident on the entire non-self-shadowed part of the object. We demonstrate that our method compactly stores highly complex materials while achieving high accuracy compared with reference images of the represented object in unseen lighting environments. Compared with path tracing a heterogeneous light-scattering volume behind a refractive interface, our method more easily enables importance sampling of the directions of incidence and can be integrated into existing rendering frameworks while achieving interactive frame rates.
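Because the appearance function maps (position, direction of observation, direction of incidence) to outgoing radiance for a unit directional light, relighting under a distant environment amounts to integrating it against the environment over incident directions. The minimal numpy sketch below assumes a ReLU MLP whose raw input is re-injected at one hidden layer (a NeRF-style skip connection), untrained random weights, and uniform sphere sampling. Layer sizes, the softplus output and all function names are hypothetical, and an importance sampler for the environment would replace `sample_sphere` in practice.

```python
import numpy as np

def init_mlp(rng, in_dim=9, hidden=64, depth=4, skip_at=2, out_dim=3):
    # Hypothetical architecture: `depth` ReLU layers, raw input re-injected before layer `skip_at`.
    layers, d = [], in_dim
    for i in range(depth):
        if i == skip_at:
            d += in_dim
        layers.append((rng.standard_normal((d, hidden)) * np.sqrt(2.0 / d), np.zeros(hidden)))
        d = hidden
    head = (rng.standard_normal((d, out_dim)) * np.sqrt(1.0 / d), np.zeros(out_dim))
    return layers, head, skip_at

def mlp_forward(params, x_pos, w_obs, w_inc):
    # Radiance transfer T(x, w_obs, w_inc) for a unit directional light from w_inc.
    layers, (w_out, b_out), skip_at = params
    inp = np.concatenate([x_pos, w_obs, w_inc], axis=-1)
    h = inp
    for i, (w, b) in enumerate(layers):
        if i == skip_at:
            h = np.concatenate([h, inp], axis=-1)  # skip connection
        h = np.maximum(h @ w + b, 0.0)             # ReLU
    return np.logaddexp(0.0, h @ w_out + b_out)    # softplus: non-negative RGB

def sample_sphere(n, rng):
    # Uniform directions on the unit sphere (pdf = 1 / (4*pi)).
    z = 1.0 - 2.0 * rng.random(n)
    phi = 2.0 * np.pi * rng.random(n)
    r = np.sqrt(np.maximum(0.0, 1.0 - z * z))
    return np.stack([r * np.cos(phi), r * np.sin(phi), z], axis=-1)

def relight(params, x_pos, w_obs, env, n_dirs, rng):
    # Monte Carlo estimate of L(x, w_obs) = integral of T(x, w_obs, w_i) * L_env(w_i) over w_i.
    pdf = 1.0 / (4.0 * np.pi)
    total = np.zeros(x_pos.shape[:-1] + (3,))
    for w_i in sample_sphere(n_dirs, rng):
        w_inc = np.broadcast_to(w_i, x_pos.shape)  # same distant direction for every point
        total += mlp_forward(params, x_pos, w_obs, w_inc) * env(w_i) / pdf
    return total / n_dirs

rng = np.random.default_rng(0)
params = init_mlp(rng)
points = rng.uniform(-1.0, 1.0, (5, 3))
view = np.tile([0.0, 0.0, 1.0], (5, 1))
sky = lambda w: np.full(3, max(w[2], 0.0))  # hypothetical environment: brighter towards +z
radiance = relight(params, points, view, sky, 64, rng)
print(radiance.shape)  # (5, 3)
```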

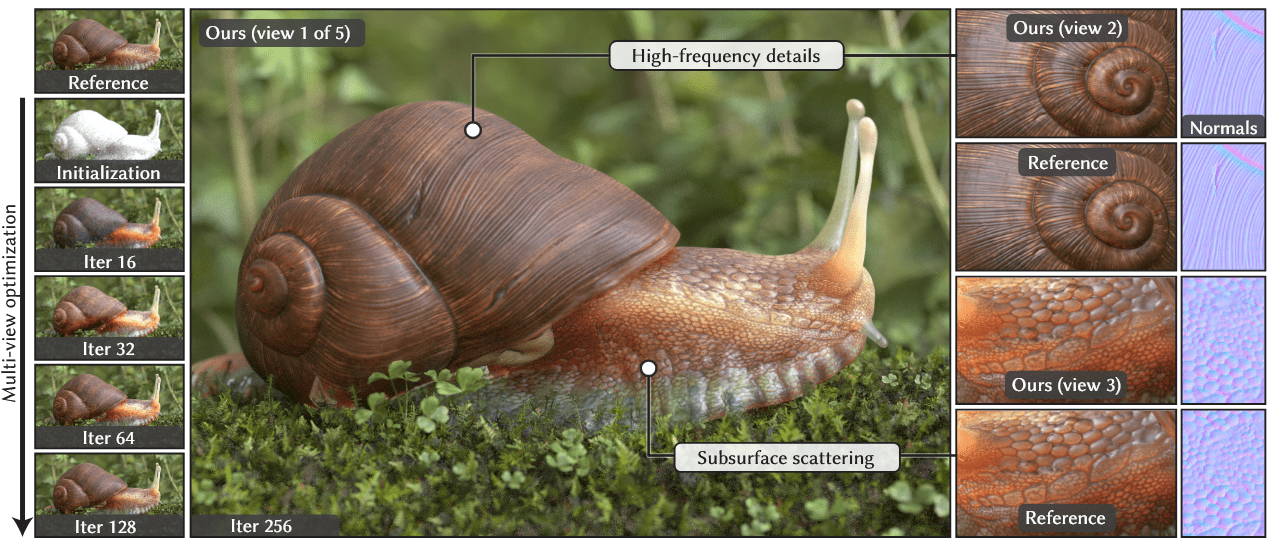

: Inverse rendering has emerged as a standard tool to reconstruct the parameters of appearance models from images (e.g., textured BSDFs). In this work, we present several novel contributions motivated by the practical challenges of recovering high-resolution surface appearance textures, including spatially-varying subsurface scattering parameters.

First, we propose Laplacian mipmapping, which combines differentiable mipmapping and a Laplacian pyramid representation into an effective preconditioner. This seemingly simple technique significantly improves the quality of recovered surface textures on a set of challenging inverse rendering problems. Our method automatically adapts to the render and texture resolutions, incurs only moderate computational cost, and achieves better quality than prior work while using fewer hyperparameters. Second, we introduce a specialized gradient computation algorithm for textured, path-traced subsurface scattering, which facilitates faithful reconstruction of translucent materials. By using path tracing, we enable the recovery of complex appearance while avoiding the approximations of the previously used diffusion dipole methods. Third, we demonstrate the application of both techniques to reconstructing the textured appearance of human faces from sparse captures. Our method recovers high-quality relightable appearance parameters that are compatible with current production renderers.
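The Laplacian-pyramid half of this idea can be sketched as follows. Instead of optimizing texels directly, the texture is parameterized by band-pass detail levels plus a low-pass residual, and gradients reach each level through the adjoint of upsampling. This minimal sketch assumes 2×2 box downsampling, nearest-neighbour upsampling and power-of-two texture sizes. It omits the differentiable mipmapping the method couples with the pyramid, and all function names are hypothetical.

```python
import numpy as np

def down(img):
    # 2x2 box filter (assumes even side lengths).
    h, w = img.shape[:2]
    return img.reshape(h // 2, 2, w // 2, 2, *img.shape[2:]).mean(axis=(1, 3))

def up(img):
    # Nearest-neighbour upsampling by a factor of 2.
    return img.repeat(2, axis=0).repeat(2, axis=1)

def build_pyramid(tex, levels):
    pyr, cur = [], tex
    for _ in range(levels - 1):
        coarse = down(cur)
        pyr.append(cur - up(coarse))  # band-pass detail
        cur = coarse
    pyr.append(cur)                   # low-pass residual
    return pyr

def reconstruct(pyr):
    cur = pyr[-1]
    for detail in reversed(pyr[:-1]):
        cur = up(cur) + detail
    return cur

def pyramid_grads(grad_tex, levels):
    # Chain rule through reconstruct(): the adjoint of nearest upsampling is a 2x2 block sum,
    # so coarse coefficients receive the summed gradient of many texels.
    grads, g = [], grad_tex
    for _ in range(levels - 1):
        grads.append(g)
        h, w = g.shape[:2]
        g = g.reshape(h // 2, 2, w // 2, 2, *g.shape[2:]).sum(axis=(1, 3))
    grads.append(g)
    return grads

rng = np.random.default_rng(0)
tex = rng.random((8, 8, 3))
pyr = build_pyramid(tex, levels=3)
print([p.shape for p in pyr])              # [(8, 8, 3), (4, 4, 3), (2, 2, 3)]
print(np.allclose(reconstruct(pyr), tex))  # True
```

Since coarse coefficients aggregate gradients over large texel blocks, a plain gradient step moves low frequencies much faster than per-texel optimization, which is the preconditioning effect.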

In the domain of wood modeling, we present a new complex appearance model, coupled with a user-friendly, NLP-based frontend for intuitive interaction. First, we present a procedural wood model capable of accurately simulating intricate wood characteristics, including growth rings, vessels/pores, rays, knots, and figure. We also introduce new features, including brushiness distortion, influence points, and individual feature control. These enhancements enable a more precise match between procedurally generated wood and ground-truth images. Second, we present a text-based user interface that relies on a trained natural language processing model designed to map users' plain-English requests into the parameter space of our procedural wood model. This significantly reduces the complexity of the authoring process, enabling any user, regardless of their woodworking expertise or familiarity with procedural materials, to make full use of the model.
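As a rough illustration of the growth-ring component only, the sketch below colours a 2D cross-section by its distance from the pith, perturbed by a low-frequency sine as a stand-in for noise, and blends hypothetical earlywood and latewood colours within each annual ring. The parameter names, colours and the sine distortion are assumptions for illustration; vessels, rays, knots, figure and the other features of the described model are not represented.

```python
import numpy as np

def growth_rings(width, height, ring_spacing=0.08, distortion=0.02, pith=(0.0, -0.5),
                 early=(0.85, 0.65, 0.45), late=(0.55, 0.35, 0.2), late_fraction=0.3):
    # Coordinates in [0, 1]^2 on a slice perpendicular to the trunk; the pith lies off the slice.
    ys, xs = np.mgrid[0:height, 0:width]
    u = xs / (width - 1)
    v = ys / (height - 1)
    # Distance from the pith, perturbed by a low-frequency sine (stand-in for noise).
    r = np.hypot(u - pith[0], v - pith[1]) + distortion * np.sin(12.0 * u + 5.0 * v)
    phase = (r / ring_spacing) % 1.0  # position within the current annual ring
    # Earlywood for most of the ring, latewood near its outer edge, smooth transition.
    t = np.clip((phase - (1.0 - late_fraction)) / 0.05, 0.0, 1.0)
    t = t * t * (3.0 - 2.0 * t)       # smoothstep
    early, late = np.asarray(early), np.asarray(late)
    return early * (1.0 - t[..., None]) + late * t[..., None]

img = growth_rings(256, 128)
print(img.shape)  # (128, 256, 3)
```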

Lucia Tódová, Alexander Wilkie, Luca Fascione. June 2021. Eurographics Symposium on Rendering (EGSR). Abstract: We propose a technique to efficiently importance sample and store fluorescent spectral data. Fluorescence behaviour is properly represented as a re-radiation matrix: for a given input …
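Sampling an output wavelength from a re-radiation matrix can be illustrated with a discretized matrix and inverse-CDF sampling per input column. The sketch assumes a hypothetical layout `reradiation[o, i]` (energy re-emitted in output bin `o` per unit input in bin `i`, bins ordered by increasing wavelength). It is a generic tabulated approach and not necessarily the storage scheme proposed in the paper; the function names are illustrative.

```python
import numpy as np

def build_sampling_tables(reradiation):
    # Normalize each input column into a discrete pdf over output bins, then accumulate a CDF.
    col_sums = reradiation.sum(axis=0)
    pdfs = reradiation / np.where(col_sums > 0, col_sums, 1.0)
    cdfs = np.cumsum(pdfs, axis=0)
    return pdfs, cdfs

def sample_output_bin(cdfs, pdfs, in_bin, u):
    # Inverse-CDF sampling of the output bin for a given input bin, with u uniform in [0, 1).
    # side="right" never selects zero-probability bins; an all-zero column yields pdf 0.
    cdf = cdfs[:, in_bin]
    o = min(int(np.searchsorted(cdf, u * cdf[-1], side="right")), cdf.size - 1)
    return o, pdfs[o, in_bin]

# Usage: a toy 4-bin matrix obeying the Stokes shift (emission only at equal or longer wavelengths).
M = np.array([[0.5, 0.0, 0.0, 0.0],
              [0.3, 0.4, 0.0, 0.0],
              [0.2, 0.4, 0.6, 0.0],
              [0.0, 0.2, 0.4, 1.0]])
pdfs, cdfs = build_sampling_tables(M)
rng = np.random.default_rng(0)
out_bin, pdf = sample_output_bin(cdfs, pdfs, in_bin=0, u=rng.random())
print(out_bin, pdf)
```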