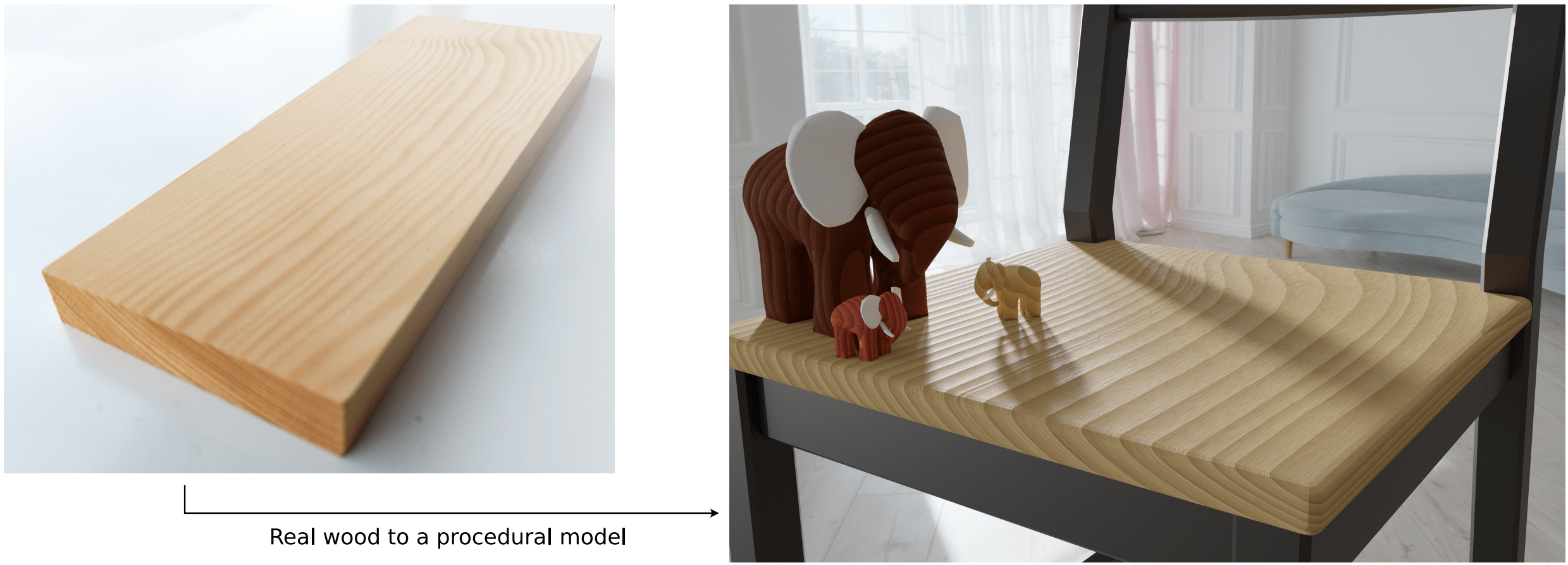

Wood is a volumetric material with a very large appearance gamut that is further enlarged by numerous finishing techniques. Computer graphics has made considerable progress in creating sophisticated and flexible appearance models that allow convincing renderings of wooden materials. However, these do not yet allow fully automatic appearance matching to a concrete exemplar piece of wood, and have to be fine-tuned by hand. More general appearance matching strategies are incapable of reconstructing anatomically meaningful volumetric information. This is essential for applications where the internal structure of wood is significant, such as non-planar furniture parts machined from a solid block of wood, translucent appearance of thin wooden layers, or in the field of dendrochronology. In this paper, we provide the two key ingredients for automatic matching of a procedural wood appearance model to exemplar photographs: a good initialization, built on detecting and modelling the ring structure, and a phase-based loss function that allows us to accurately recover growth ring deformations and yields anatomically meaningful results. Our ring-detection technique is based on curved Gabor filters and works robustly for a considerable range of wood types.
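To make the idea of a phase-based comparison concrete, here is a minimal sketch under simplifying assumptions. It applies ordinary straight 1D Gabor filters to a radial intensity profile (not the curved 2D Gabor filters of the paper), and the loss form `1 - cos(Δφ)` averaged over filter centres is an illustrative choice of ours. The helper names `gabor_response` and `phase_loss`, and all parameter values, are hypothetical.

```python
import cmath
import math


def gabor_response(signal, center, freq, sigma):
    """Complex response of a 1D Gabor filter centred at `center`.

    freq is in cycles per sample; sigma is the Gaussian envelope width in samples.
    """
    acc = 0j
    for x, value in enumerate(signal):
        d = x - center
        envelope = math.exp(-d * d / (2.0 * sigma * sigma))
        acc += value * envelope * cmath.exp(-2j * math.pi * freq * d)
    return acc


def phase_loss(profile_a, profile_b, freq, sigma, centers):
    """Mean of 1 - cos(phase difference) over the sampled filter centres."""
    total = 0.0
    for c in centers:
        pa = cmath.phase(gabor_response(profile_a, c, freq, sigma))
        pb = cmath.phase(gabor_response(profile_b, c, freq, sigma))
        total += 1.0 - math.cos(pa - pb)
    return total / len(centers)


# Hypothetical ring profile with a period of 12 samples.
rings = [math.cos(2 * math.pi * x / 12) for x in range(96)]
centers = list(range(24, 72, 6))
print(phase_loss(rings, rings, 1 / 12, 6.0, centers))               # identical profiles
print(phase_loss(rings, [-v for v in rings], 1 / 12, 6.0, centers))  # rings shifted by half a period
```

Comparing phases rather than raw intensities makes this loss insensitive to a positive rescaling of contrast, while it still reacts strongly when the ring positions shift; this is one plausible reason a phase-based loss suits the recovery of growth-ring deformations.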

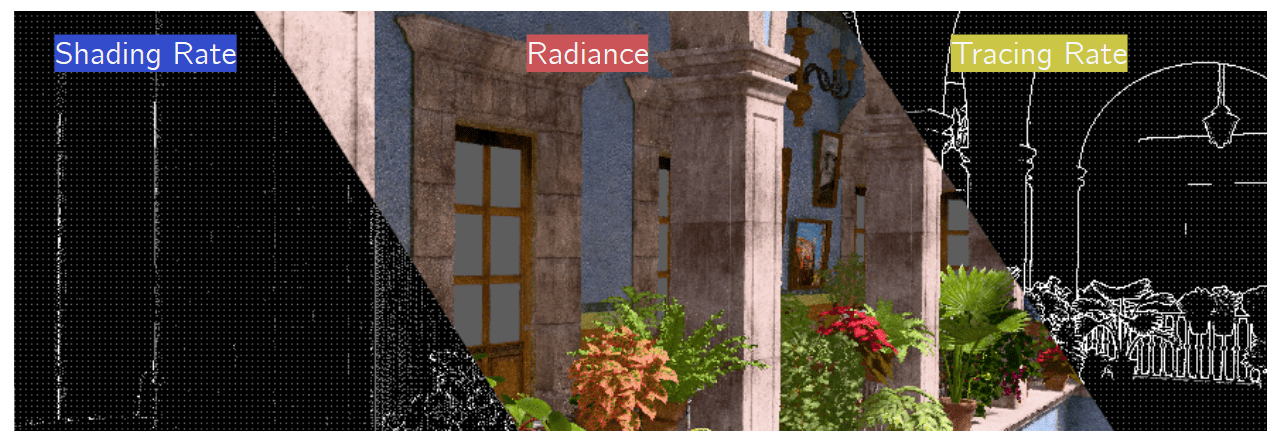

We present an algorithm for interactive stereoscopic ray tracing that decouples visibility from shading and enables caching of radiance results for temporally stable and stereoscopically consistent rendering. Taking interactive stable ray tracing as our starting point, we construct a screen-space cache that carries surface samples from frame to frame via forward reprojection. Using a visibility heuristic, we adaptively trace the samples and achieve high performance with few temporal artefacts. Our method also serves as a shading cache, which enables temporal reuse and filtering of shading results in virtual reality (VR). We demonstrate good antialiasing and temporal coherence when filtering geometric edges. We compare our screen-space, sample-based radiance caching with temporal antialiasing (TAA) and with a hash-based shading cache that operates on a voxel representation of world space. In addition, we show how to extend the shading cache into a radiance cache. Finally, we use the per-sample radiance values to improve stereo vision by employing stereo blending with improved estimates of the blending parameter between the two views.
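Forward reprojection, as used for the screen-space cache above, can be sketched as follows: each cached world-space sample is projected into the new frame, and when several samples land on the same pixel the closest one wins. This is a minimal illustration under stated assumptions, not the paper's implementation; the pinhole camera looking down -z without rotation, and the names `Sample`, `project` and `forward_reproject`, are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    pos: tuple        # world-space position (x, y, z)
    radiance: float   # cached shading result carried between frames


def project(pos, cam_pos, focal, width, height):
    """Project a point with a hypothetical pinhole camera at cam_pos looking down -z."""
    x, y, z = (p - c for p, c in zip(pos, cam_pos))
    depth = -z
    if depth <= 0.0:
        return None  # behind the camera
    u = width / 2 + focal * x / depth
    v = height / 2 - focal * y / depth
    px, py = math.floor(u), math.floor(v)
    if 0 <= px < width and 0 <= py < height:
        return px, py, depth
    return None


def forward_reproject(samples, cam_pos, focal, width, height):
    """Scatter samples into the new frame; the nearest sample per pixel survives."""
    best = {}  # (px, py) -> (depth, sample)
    for s in samples:
        hit = project(s.pos, cam_pos, focal, width, height)
        if hit is None:
            continue
        px, py, depth = hit
        prev = best.get((px, py))
        if prev is None or depth < prev[0]:
            best[(px, py)] = (depth, s)
    return {pixel: entry[1] for pixel, entry in best.items()}


samples = [Sample((0.0, 0.0, -10.0), 0.2), Sample((0.0, 0.0, -5.0), 1.0),
           Sample((0.0, 0.0, 5.0), 9.0)]
cache = forward_reproject(samples, (0.0, 0.0, 0.0), 32.0, 64, 64)
print(cache)
```

Pixels missing from the returned dictionary are disocclusions; in a full system these would be handed to the ray tracer, a step (together with the visibility heuristic) that this sketch does not model.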

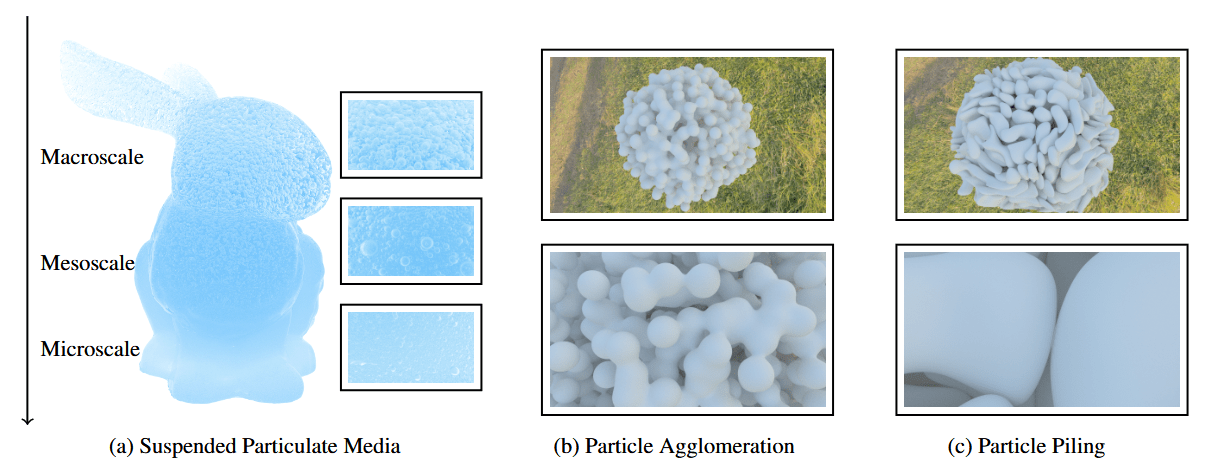

Materials exhibit geometric structures across mesoscopic to microscopic scales, influencing macroscale properties such as appearance, mechanical strength, and thermal behavior. Capturing and modeling these multiscale structures is challenging but essential for computer graphics, engineering, and materials science. We present a framework inspired by hypertexture methods, using implicit surfaces and sphere tracing to synthesize multiscale structures on the fly without precomputation. This framework models volumetric materials with particulate, fibrous, porous, and laminar structures, allowing control over size, shape, density, distribution, and orientation. We enhance structural diversity by superimposing implicit periodic functions while improving computational efficiency. The framework also supports spatially varying particulate media, particle agglomeration, and piling on convex and concave structures, such as rock formations (mesoscale), without explicit simulation. We demonstrate its potential in the appearance modeling of volumetric materials and investigate how spatially varying properties affect the perceived macroscale appearance. As a proof of concept, we show that microstructures created by our framework can be reconstructed from image and distance values defined by implicit surfaces, using both first-order and gradient-free optimization methods.
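As a minimal sketch of how superimposed implicit periodic functions and sphere tracing fit together, the example below builds an infinite lattice of spheres by domain repetition, superimposes two lattices with different periods via a union (`min`), and sphere-traces a ray through the result. The lattice parameters and the names `repeated_spheres_sdf`, `superimposed_sdf` and `sphere_trace` are hypothetical; the paper's framework is considerably richer than this.

```python
import math


def repeated_spheres_sdf(p, spacing, radius):
    """Signed distance to an infinite lattice of spheres (exact while radius < spacing / 2)."""
    q = [c - spacing * math.floor(c / spacing + 0.5) for c in p]  # fold into the nearest cell
    return math.sqrt(sum(c * c for c in q)) - radius


def superimposed_sdf(p):
    # Hypothetical parameters: two particulate lattices with different periods, combined by union.
    return min(repeated_spheres_sdf(p, 2.0, 0.5),
               repeated_spheres_sdf(p, 3.0, 0.2))


def sphere_trace(sdf, origin, direction, max_t=100.0, eps=1e-6, max_steps=256):
    """March along a unit-length ray, stepping by the SDF value; return hit distance or None."""
    t = 0.0
    for _ in range(max_steps):
        p = [o + t * d for o, d in zip(origin, direction)]
        dist = sdf(p)
        if dist < eps:
            return t
        t += dist
        if t > max_t:
            break
    return None


print(sphere_trace(superimposed_sdf, (0.0, 0.0, 1.0), (0.0, 0.0, 1.0)))
print(sphere_trace(superimposed_sdf, (1.0, 1.0, 0.0), (0.0, 0.0, 1.0)))  # passes between spheres
```

Because each lattice SDF is exact while the radius stays below half the spacing, and the minimum of exact SDFs is the exact distance to their union outside the surface, the sphere-tracing steps never overshoot; arbitrary periodic displacements would need a smaller step factor.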

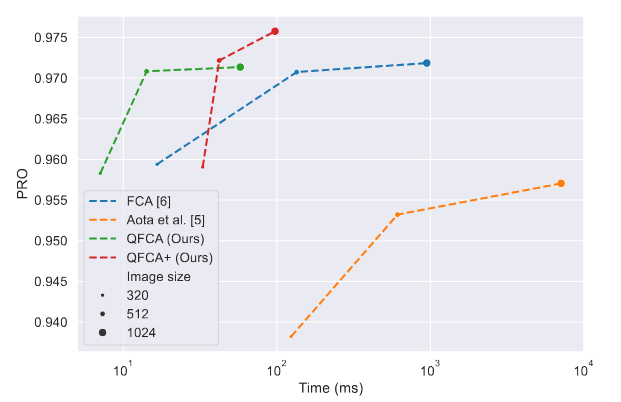

Zero-shot anomaly localization is a rising field in computer vision research, with important progress in recent years. This work focuses on the problem of detecting and localizing anomalies in textures, where anomalies can be defined as the regions that deviate from the overall statistics, violating the stationarity assumption. The main limitation of existing methods is their high running time, making them impractical for deployment in real-world scenarios, such as assembly line monitoring. We propose a real-time method, named QFCA, which implements a quantized version of the feature correspondence analysis (FCA) algorithm. By carefully adapting the patch statistics comparison to work on histograms of quantized values, we obtain a 10× speedup with little to no loss in accuracy. Moreover, we introduce a feature preprocessing step based on principal component analysis, which enhances the contrast between normal and anomalous features, improving the detection precision on complex textures. Our method is thoroughly evaluated against prior art, comparing favorably with existing methods.
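The histogram-based patch comparison described above can be illustrated with a small sketch: feature values are quantized into a fixed number of bins, and a patch's histogram is compared with a reference histogram using the 1D Wasserstein-1 distance computed from cumulative sums. The choice of distance, the single scalar feature channel, and the names `quantize`, `histogram`, `wasserstein1_hist` and `anomaly_score` are assumptions for illustration, not QFCA's exact statistic.

```python
def quantize(value, lo, hi, bins):
    """Map a feature value to a bin index in [0, bins - 1]."""
    idx = int((value - lo) / (hi - lo) * bins)
    return min(bins - 1, max(0, idx))


def histogram(values, lo, hi, bins):
    """Normalized histogram of quantized feature values."""
    counts = [0] * bins
    for v in values:
        counts[quantize(v, lo, hi, bins)] += 1
    n = len(values)
    return [c / n for c in counts]


def wasserstein1_hist(h_a, h_b, bin_width):
    """1D Wasserstein-1 distance between two histograms on the same bins (L1 between CDFs)."""
    cdf_a = cdf_b = 0.0
    dist = 0.0
    for pa, pb in zip(h_a[:-1], h_b[:-1]):
        cdf_a += pa
        cdf_b += pb
        dist += abs(cdf_a - cdf_b) * bin_width
    return dist


def anomaly_score(patch_values, reference_hist, lo, hi, bins):
    h = histogram(patch_values, lo, hi, bins)
    return wasserstein1_hist(h, reference_hist, (hi - lo) / bins)


reference = histogram([0.05] * 4, 0.0, 1.0, 10)
print(anomaly_score([0.05] * 4, reference, 0.0, 1.0, 10))  # normal patch
print(anomaly_score([0.15] * 4, reference, 0.0, 1.0, 10))  # shifted, anomalous patch
```

Working on small integer histograms turns each patch comparison into a fixed-size loop over bins, independent of the number of feature samples, which is the kind of saving that quantization makes possible.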

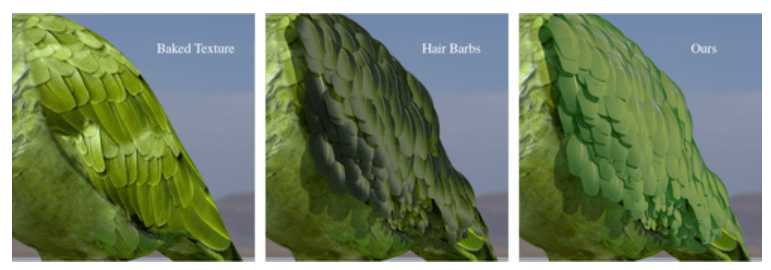

The appearance of a real-world feather results from the complex interaction of light with its multi-scale biological structure, including the central shaft, the branching barbs, and the interlocking barbules on those barbs. In this work, we propose a practical surface-based appearance model for feathers. We represent the far-field appearance of feathers using a BSDF that implicitly represents the light scattering from the main biological structures of a feather, such as the shaft, barbs, and barbules. Our model accounts for the particular characteristics of feather barbs, such as their non-cylindrical cross-sections and scattering media, via a numerically based BCSDF. To model the relative visibility between barbs and barbules, we derive a masking term for the differential projected areas of the different components of the feather's microgeometry, which allows us to compute the masking between barbs and barbules analytically. Unlike previous works, our model uses a lightweight representation of the geometry based on a 2D texture and does not require explicitly representing the barbs as curves. We show the flexibility and potential of our appearance model to represent the most important visual features of several pennaceous feathers.

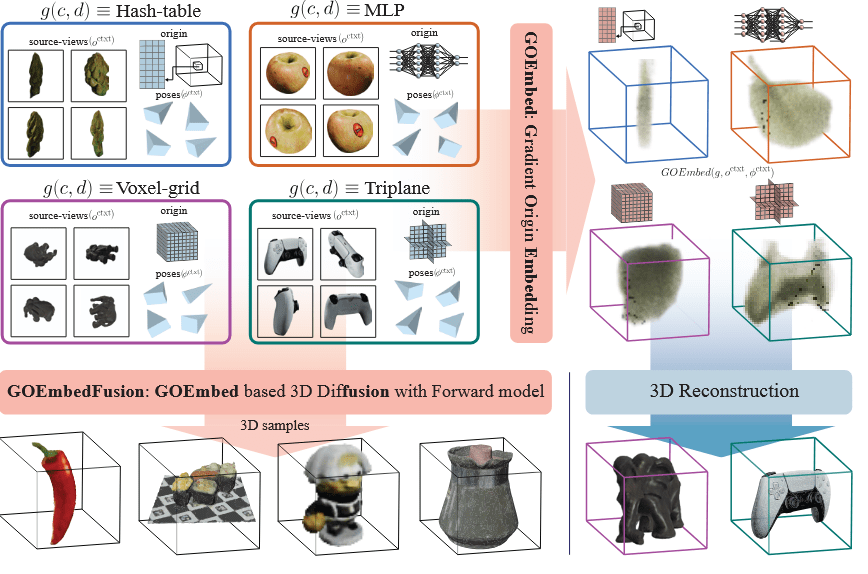

Encoding information from 2D views of an object into a 3D representation is crucial for generalized 3D feature extraction. Such features can then enable 3D reconstruction, 3D generation, and other applications. We propose GOEmbed (Gradient Origin Embeddings), which encodes input 2D images into any 3D representation without requiring a pre-trained image feature extractor. This is unlike typical prior approaches, in which input images are either encoded using 2D features extracted from large pre-trained models, or customized features are designed to handle different 3D representations; worse, encoders may not yet be available for specialized 3D neural representations such as MLPs and hash-grids. We extensively evaluate our proposed GOEmbed under different experimental settings on the OmniObject3D benchmark. First, we evaluate how well the mechanism compares against prior encoding mechanisms on multiple 3D representations using an illustrative experiment called Plenoptic-Encoding. Second, the efficacy of the GOEmbed mechanism is further demonstrated by achieving a new state-of-the-art FID of 22.12 on the OmniObject3D generation task using a combination of GOEmbed and DFM (Diffusion with Forward Models), which we call GOEmbedFusion. Finally, we evaluate how the GOEmbed mechanism bolsters sparse-view 3D reconstruction pipelines.

>>authors: Animesh Karnewar, Roman Shapovalov, Tom Monnier, Andrea Vedaldi, Niloy J. Mitra, David Novotny
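One reading of "gradient origin" encoding, borrowed from gradient origin networks, is to initialize the 3D representation at zero, render it, and use the negative gradient of the reconstruction loss at that origin as the embedding. The sketch below follows that reading only as an assumption, not as GOEmbed's exact formulation: it uses a toy linear "renderer" and central finite differences so that it works for any differentiable `render` function. The names `reconstruction_loss` and `gradient_origin_embedding`, the matrix `A` and the target values are hypothetical.

```python
def reconstruction_loss(render, z, target):
    """Half squared error between the rendered representation and the observed pixels."""
    pred = render(z)
    return 0.5 * sum((p - t) ** 2 for p, t in zip(pred, target))


def gradient_origin_embedding(render, target, size, h=1e-4):
    """Embed `target` as the negative loss gradient w.r.t. a zero-initialized representation."""
    embedding = []
    for i in range(size):
        z_plus = [0.0] * size
        z_minus = [0.0] * size
        z_plus[i] = h
        z_minus[i] = -h
        # Central finite difference of the loss along coordinate i, evaluated at the origin.
        grad_i = (reconstruction_loss(render, z_plus, target)
                  - reconstruction_loss(render, z_minus, target)) / (2 * h)
        embedding.append(-grad_i)
    return embedding


# Toy linear renderer: three "pixels" observed from a 2-parameter representation.
A = [[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]]


def render(z):
    return [sum(a * zj for a, zj in zip(row, z)) for row in A]


print(gradient_origin_embedding(render, [1.0, 2.0, 3.0], 2))  # approximately [4.0, 7.0]
```

For a linear renderer this embedding equals the transpose of the rendering matrix applied to the image (a back-projection), which illustrates why such an encoder needs no pre-trained feature extractor: only the renderer of the chosen 3D representation is required.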

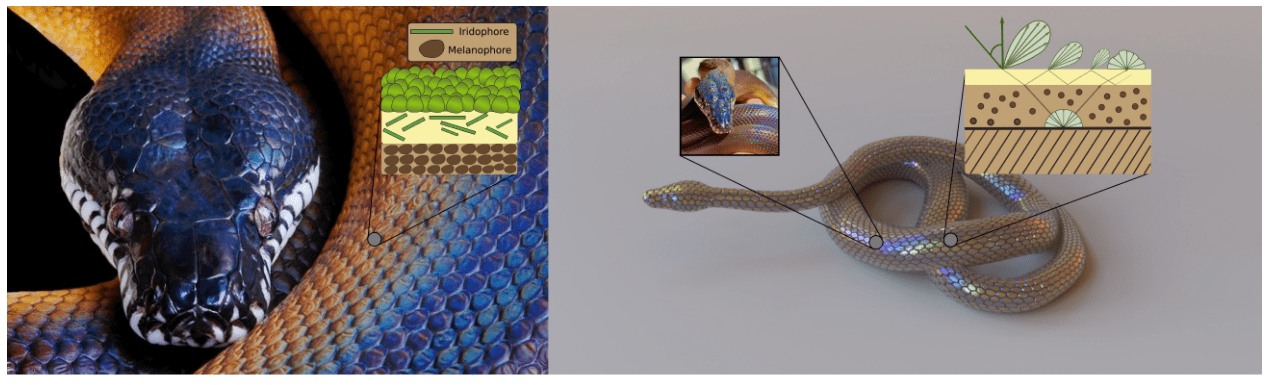

Simulating light transport in biological tissues is a longstanding challenge, given their complex multilayered structure. In biology, the scales that cover the skin of reptiles are among the most remarkable and most studied examples of such tissues, combining photonic structures and pigmentation. This problem, however, has been largely ignored in computer graphics. In this work, we propose a multilayered appearance model based on the anatomy of snake skin. Some snakes are known for their striking, highly iridescent scales, which result from light interference. We model snake skin as a two-layered reflectance function: the top layer is a thin layer that produces a specular iridescent reflection, while the bottom layer is a highly absorbing diffuse layer whose dark appearance maximizes the iridescent color of the skin. We demonstrate our layered material on a wide range of appearances, and show that our model is able to qualitatively match the appearance of snake skin.
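The interference behind the iridescent top layer can be illustrated with the classic Airy formula for a single thin film, here at normal incidence with real refractive indices. The dark bottom layer is approximated by a crude additive diffuse term weighted by the energy not reflected by the film, which ignores interreflections; this is an assumption for illustration, not the paper's layered model. All indices, thicknesses and the names `thin_film_reflectance` and `snake_scale_reflectance` are hypothetical.

```python
import cmath
import math


def thin_film_reflectance(wavelength_nm, thickness_nm, n_outer=1.0, n_film=1.5, n_base=1.5):
    """Airy reflectance of a single thin film at normal incidence (real indices)."""
    r12 = (n_outer - n_film) / (n_outer + n_film)  # Fresnel amplitude, outer -> film
    r23 = (n_film - n_base) / (n_film + n_base)    # Fresnel amplitude, film -> base
    delta = 4.0 * math.pi * n_film * thickness_nm / wavelength_nm  # round-trip phase
    phase = cmath.exp(1j * delta)
    r = (r12 + r23 * phase) / (1 + r12 * r23 * phase)
    return abs(r) ** 2


def snake_scale_reflectance(wavelength_nm, thickness_nm, base_albedo=0.02, **indices):
    """Crude two-layer sketch: iridescent film plus a dark diffuse base (no interreflections)."""
    spec = thin_film_reflectance(wavelength_nm, thickness_nm, **indices)
    return spec + (1.0 - spec) * base_albedo


for wl in (450.0, 550.0, 650.0):
    print(wl, snake_scale_reflectance(wl, 300.0, n_base=1.2))
```

Because the round-trip phase depends on wavelength, the film reflects some wavelengths far more strongly than others; a low `base_albedo` keeps the diffuse term from washing out this spectral variation, which mirrors the role of the dark absorbing layer described above.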

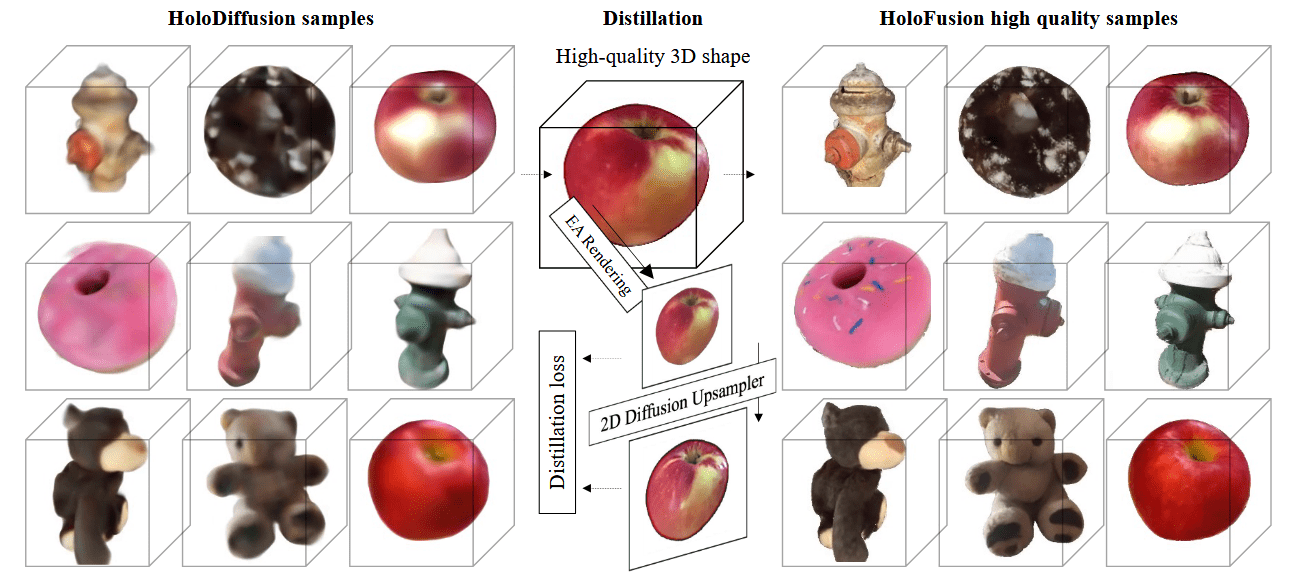

Diffusion-based image generators can now produce high-quality and diverse samples, but their success has yet to fully translate to 3D generation: existing diffusion methods can either generate low-resolution but 3D consistent outputs, or detailed 2D views of 3D objects but with potential structural defects and lacking view consistency or realism. We present HoloFusion, a method that combines the best of these approaches to produce high-fidelity, plausible, and diverse 3D samples while learning from a collection of multi-view 2D images only. The method first generates coarse 3D samples using a variant of the recently proposed HoloDiffusion generator. Then, it independently renders and upsamples a large number of views of the coarse 3D model, super-resolves them to add detail, and distills those into a single, high-fidelity implicit 3D representation, which also ensures view-consistency of the final renders. The super-resolution network is trained as an integral part of HoloFusion, end-to-end, and the final distillation uses a new sampling scheme to capture the space of super-resolved signals. We compare our method against existing baselines, including DreamFusion, Get3D, EG3D, and HoloDiffusion, and achieve, to the best of our knowledge, the most realistic results on the challenging CO3Dv2 dataset.

>>authors: Animesh Karnewar, Niloy J. Mitra, Andrea Vedaldi, David Novotny

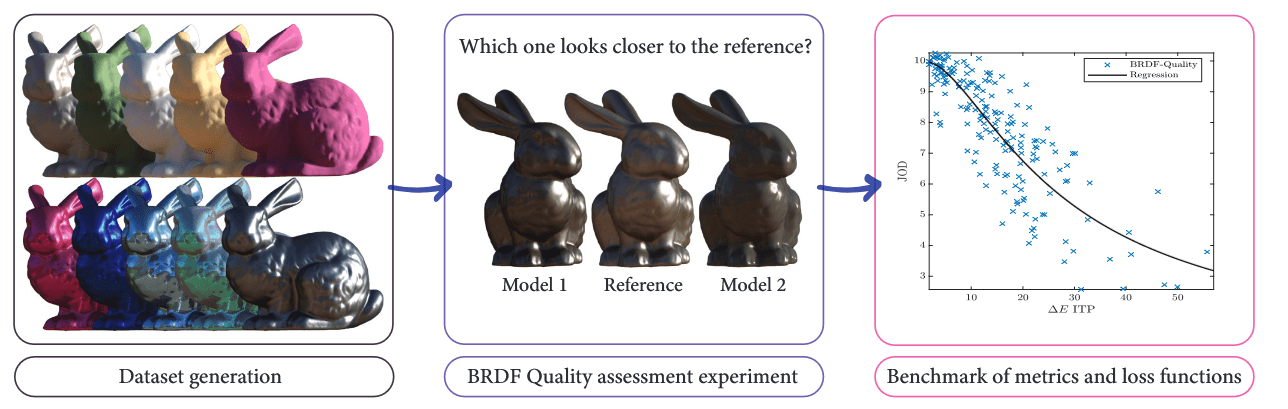

Material appearance is commonly modeled with bidirectional reflectance distribution functions (BRDFs), which must trade accuracy against complexity and storage cost. To investigate current BRDF modeling practices, we collect the first high-dynamic-range stereoscopic video dataset that captures the perceived quality degradation for a number of parametric and non-parametric BRDF models. Our dataset shows that the loss functions currently used to fit BRDF models, such as the mean-squared error of logarithmic reflectance values, correlate poorly with the perceived quality of materials in rendered videos. We further show that quality metrics that compare rendered material samples correlate significantly better with subjective quality judgments, and that a simple Euclidean distance in the ITP color space (ΔE_ITP) shows the highest correlation. Additionally, we investigate the use of different BRDF-space metrics as loss functions for fitting BRDF models and find that logarithmic mapping is the most effective approach for BRDF-space loss functions.
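For reference, ΔE_ITP can be computed per pixel as sketched below. The conversion follows the ICtCp definition with the PQ curve from ITU-R BT.2100, and the distance follows ITU-R BT.2124 (T = 0.5·Ct, P = Cp, scaled by 720). The sketch assumes inputs are linear BT.2020 RGB values in absolute luminance (cd/m²); how the paper maps its rendered videos into this space is not stated here, and the function names are hypothetical.

```python
import math

# PQ (SMPTE ST 2084) inverse-EOTF constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_encode(y):
    """PQ-encode absolute luminance y (cd/m^2), normalized by the 10,000 cd/m^2 peak."""
    ym = (max(y, 0.0) / 10000.0) ** M1
    return ((C1 + C2 * ym) / (1 + C3 * ym)) ** M2


def rgb2020_to_itp(rgb):
    """Linear BT.2020 RGB (cd/m^2) -> (I, T, P) as used by Delta E_ITP."""
    r, g, b = rgb
    l = (1688 * r + 2146 * g + 262 * b) / 4096
    m = (683 * r + 2951 * g + 462 * b) / 4096
    s = (99 * r + 309 * g + 3688 * b) / 4096
    lp, mp, sp = pq_encode(l), pq_encode(m), pq_encode(s)
    i = 0.5 * lp + 0.5 * mp
    ct = (6610 * lp - 13613 * mp + 7003 * sp) / 4096
    cp = (17933 * lp - 17390 * mp - 543 * sp) / 4096
    return i, 0.5 * ct, cp  # T = 0.5 * Ct, P = Cp


def delta_e_itp(rgb_a, rgb_b):
    ia, ta, pa = rgb2020_to_itp(rgb_a)
    ib, tb, pb = rgb2020_to_itp(rgb_b)
    return 720 * math.sqrt((ia - ib) ** 2 + (ta - tb) ** 2 + (pa - pb) ** 2)


print(delta_e_itp((100.0, 50.0, 20.0), (90.0, 60.0, 25.0)))
```

A ΔE_ITP of 1 is intended to correspond roughly to a just-noticeable difference, which is one reason such a perceptually scaled distance on rendered images can track subjective judgments better than errors measured directly on BRDF values.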

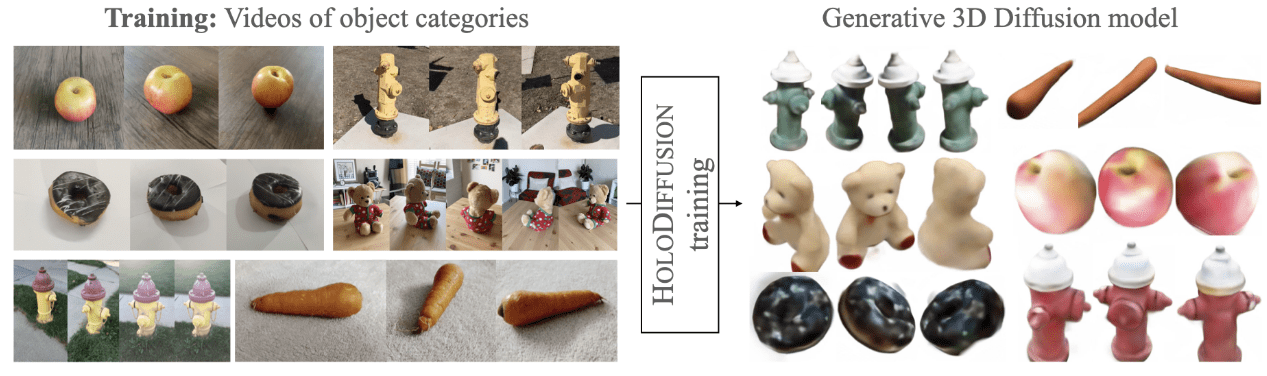

Diffusion models have emerged as the best approach for generative modeling of 2D images. Part of their success is due to the possibility of training them on millions, if not billions, of images with a stable learning objective. However, extending these models to 3D remains difficult for two reasons. First, finding a large quantity of 3D training data is much more complex than for 2D images. Second, while it is conceptually trivial to extend the models to operate on 3D rather than 2D grids, the associated cubic growth in memory and compute complexity makes this infeasible. We address the first challenge by introducing a new diffusion setup that can be trained end-to-end with only posed 2D images for supervision, and the second challenge by proposing an image formation model that decouples model memory from spatial memory. We evaluate our method on real-world data, using the CO3D dataset, which has not been used to train 3D generative models before. We show that our diffusion models are scalable, train robustly, and are competitive with existing approaches for 3D generative modeling in terms of sample quality and fidelity.
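The cubic-growth argument can be made concrete with a little arithmetic. The sketch below counts the memory of a single dense float32 grid with 3 channels at a given resolution; it ignores batch size, network activations and intermediate features, which only widen the gap. The function name `grid_bytes` and the channel count are hypothetical choices for illustration.

```python
def grid_bytes(resolution, channels, dims, bytes_per_value=4):
    """Memory of one dense float32 grid with `dims` spatial dimensions."""
    return channels * resolution ** dims * bytes_per_value


for res in (64, 128, 256):
    img = grid_bytes(res, 3, 2)  # 2D image grid
    vol = grid_bytes(res, 3, 3)  # 3D voxel grid at the same resolution
    print(f"{res}: 2D {img / 2**20:.2f} MiB, 3D {vol / 2**20:.2f} MiB, ratio {vol // img}")
```

At a resolution of 256, one 3-channel image takes 0.75 MiB while the corresponding voxel grid takes 192 MiB; the ratio always equals the resolution, so every doubling of resolution makes the 3D grid eight times larger instead of four.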